Ethical AI in Video Content Management: Navigating Privacy, Bias & Ownership

by Nohad Ahsan, Last updated: February 26, 2025

This blog explores the ethical challenges businesses face when integrating AI into Video Content Management systems. It covers critical issues such as privacy, algorithmic bias, transparency, and content ownership. Learn how businesses can implement responsible AI practices to ensure fairness, protect user privacy, and maintain accountability. The guide offers actionable strategies for enterprises to navigate the complexities of AI-driven video management while balancing innovation with ethical responsibility.

Imagine leading a thriving enterprise where video content fuels communication, training, marketing, and customer engagement. With each passing day, the volume of videos grows exponentially—internal meetings, product demos, client interactions, and training modules. Managing this sea of content is no small feat. This is where Video Content Management integrated with AI becomes a game-changer.

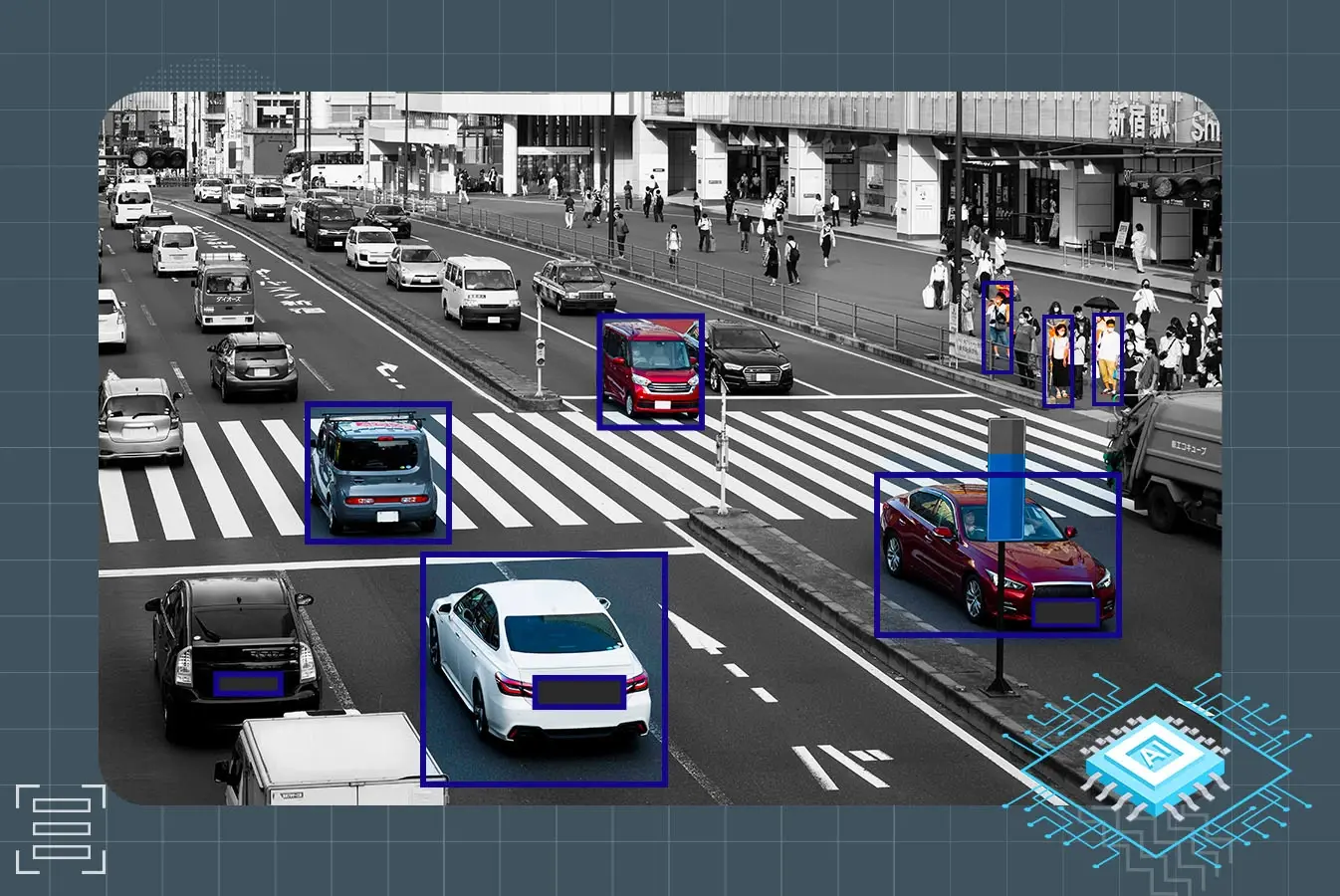

AI in Video Content Management revolutionizes how businesses organize, search, and analyze videos. It automates metadata tagging, enhances searchability, personalizes content recommendations, and provides valuable analytics. However, this technological leap comes with significant ethical challenges.

How do you protect sensitive information while leveraging AI’s powerful analytics? Can you ensure fairness and avoid bias in AI-generated content recommendations? And as AI takes on more creative roles in editing and generating content, who rightfully owns this AI-generated material?

As AI's role in Video Content Management expands, so do the ethical questions. Businesses must balance innovation with responsibility by adopting Ethical AI Practices that safeguard privacy, fairness, and accountability.

In this blog, we explore the ethical challenges of using AI in Video Content Management, offering actionable insights to help enterprises navigate this complex landscape. Whether you’re an IT leader, content strategist, or compliance officer, understanding and addressing these ethical dilemmas is crucial for responsible and sustainable AI adoption.

The Growing Role of AI in Video Content Management

As enterprises embrace digital transformation, Video Content Management has emerged as a cornerstone of modern communication strategies. From internal training and client meetings to marketing campaigns and customer engagement, video is rapidly becoming the preferred medium for conveying information.

The Explosion of Video Content

The sheer volume of video content generated by businesses today is staggering. Cisco's Visual Networking Index forecast that video would make up 82% of all consumer internet traffic. This explosive growth presents both opportunities and challenges for enterprises, particularly in managing, storing, and leveraging large amounts of video data.

Traditional methods of content management are proving inadequate for this digital era. Enterprises need sophisticated solutions to organize, retrieve, and analyze video assets efficiently. This is where Video Content Management systems powered by Ethical AI come into play, offering scalable solutions for seamless storage, categorization, and retrieval of video content.

The Promise of AI

AI can improve video content management in several ways, including:

- Automated Tagging and Metadata Generation: AI-driven tools can analyze video content and automatically generate relevant tags and metadata, making searching and organizing large volumes of video data much easier. This reduces manual labor and ensures that content can be easily accessed by the right people at the right time.

- Personalized Content Recommendations: AI can track user interactions and preferences to recommend videos most relevant to individual users. This improves engagement and ensures that employees, customers, or clients can easily find the content that best suits their needs.

- Enhanced Video Analytics: AI tools can analyze videos to extract critical insights, such as audience sentiment, viewer engagement, and video performance. This can help businesses make more informed decisions about their content strategies and better understand their audiences.

- Content Moderation: AI can automatically detect inappropriate or harmful content, ensuring that any video content shared within a platform adheres to company policies and ethical standards.
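To make automated tagging more concrete, here is a minimal sketch that assumes a speech-to-text transcript already exists for each video and suggests tags from the most frequent non-stopword terms. Production systems typically rely on trained vision and language models instead; the function name `suggest_tags`, its thresholds, and the small stopword list are hypothetical illustrations, not any vendor's implementation.

```python
import re
from collections import Counter

# Hypothetical, deliberately small stopword list; a real system would use a fuller list.
STOPWORDS = {"the", "a", "an", "and", "or", "to", "of", "in", "on", "for",
             "is", "are", "we", "you", "this", "that", "with", "it", "our", "every", "must"}

def suggest_tags(transcript: str, max_tags: int = 5, min_count: int = 2,
                 min_length: int = 4) -> list[str]:
    """Suggest tags: the most frequent non-stopword terms appearing at least min_count times."""
    words = re.findall(r"[a-z]+", transcript.lower())
    counts = Counter(w for w in words if w not in STOPWORDS and len(w) >= min_length)
    # most_common sorts by count; terms with equal counts keep first-seen order
    return [word for word, count in counts.most_common(max_tags) if count >= min_count]

transcript = (
    "Welcome to the onboarding session. Onboarding covers security policies, "
    "security training, and the expense policies every employee must follow."
)
print(suggest_tags(transcript))  # → ['onboarding', 'security', 'policies']
```

Frequency counting is only a stand-in for the idea of machine-generated metadata; the suggested tags would still benefit from a human check before they are published.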

While the advantages are compelling, the ethical challenges accompanying AI adoption cannot be ignored. Businesses must implement Ethical AI Practices to enhance security, safeguard privacy, and ensure compliance with regulatory standards.

The Ethical Dilemma in AI-Driven Video Content Management

As enterprises increasingly integrate AI into Video Content Management, they encounter a double-edged sword: enhanced efficiency and scalability on one side and complex ethical challenges on the other. While AI offers powerful tools for automating metadata tagging, personalizing content recommendations, and moderating video content, its deployment raises significant ethical questions. Navigating this ethical landscape requires a careful balance between innovation and responsibility.

The Double-Edged Sword: Efficiency vs. Ethical Complexity

AI in Video Content Management brings unprecedented efficiency, enabling enterprises to manage massive video libraries, enhance searchability, and provide personalized user experiences. However, this efficiency comes with ethical complexities that must be addressed to maintain user trust, protect privacy, and ensure fairness.

- Privacy: Video content often contains sensitive personal data, confidential business discussions, or proprietary information. AI’s ability to analyze these videos raises questions about how this data is stored, shared, and used.

- Bias: AI systems are only as good as the data they are trained on. If models are trained on biased data, their outputs can perpetuate those biases in how video content is managed or recommended.

- Transparency and Accountability: AI systems are often called “black boxes,” meaning their decision-making processes are not always clear or understandable to humans. This lack of transparency can create issues of accountability, particularly when AI-driven decisions significantly impact employees or customers.

- Content Ownership: As AI becomes more involved in creating and editing video content, the issue of content ownership becomes more complicated. Who owns the rights to AI-generated video content? Is it the company that owns the AI, the person who created the video, or the AI system itself?

The Need for Ethical AI Practices

To navigate these ethical challenges effectively, businesses must adopt Ethical AI Practices that prioritize user privacy, mitigate bias, and promote transparency. This includes:

- Establishing ethical AI frameworks with clear guidelines on data usage, privacy protection, and decision-making transparency.

- Implementing bias detection and correction mechanisms, along with regular audits to ensure fairness and inclusivity.

- Maintaining accountability through transparent and explainable AI systems that document decision-making processes.

By adopting Ethical AI Practices, enterprises can leverage the power of AI in Video Content Management while safeguarding user trust, protecting privacy, and ensuring fairness and compliance.

Understanding the Ethical Challenges

The integration of AI in Video Content Management has brought about transformative efficiencies, but it also presents significant ethical challenges. These challenges revolve around privacy, bias, intellectual property, transparency, and employee surveillance. Navigating these complexities requires implementing Ethical AI Practices to ensure fairness, accountability, and respect for user autonomy.

Data Privacy and Security Risks

Data privacy is one of the most pressing concerns with AI in video content management. AI systems often need access to sensitive data to be effective. This could include personal information, facial recognition data, or insights into private conversations within videos. When businesses use AI to analyze video content, they must ensure that the data is handled responsibly and securely.

For example, AI systems used for facial recognition in video content can inadvertently violate privacy laws if the data is stored without proper consent or misused. Data breaches or misuse of personal information could result in significant legal consequences, including fines and damage to the company’s reputation.

To mitigate these risks, companies need to implement robust data protection protocols. This includes encrypting sensitive video content, anonymizing personal data when possible, and ensuring that all data handling practices comply with regulations like GDPR and CCPA.
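One practical form of anonymization is pseudonymizing identifiers before they reach analytics pipelines. The sketch below uses only Python's standard library to replace a viewer's e-mail address in a hypothetical view-event record with a keyed HMAC-SHA256 digest, so engagement can still be counted per viewer without storing who the viewer is. The record layout and key handling are assumptions. Note that pseudonymized data generally still counts as personal data under GDPR, so this reduces risk rather than removing compliance obligations.

```python
import hashlib
import hmac
import secrets

# Assumption: in production the key would come from a secrets manager, not be generated per run.
PSEUDONYM_KEY = secrets.token_bytes(32)

def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Map an identifier to a stable keyed digest; without the key it cannot be recomputed."""
    normalized = identifier.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()

def scrub_view_event(event: dict, key: bytes = PSEUDONYM_KEY) -> dict:
    """Return a copy of a (hypothetical) view event with direct identifiers replaced."""
    cleaned = {k: v for k, v in event.items() if k not in {"viewer_email", "viewer_name"}}
    cleaned["viewer_id"] = pseudonymize(event["viewer_email"], key)
    return cleaned

event = {"video_id": "vid-001", "viewer_email": "Jane.Doe@example.com",
         "viewer_name": "Jane Doe", "watch_seconds": 312}
safe = scrub_view_event(event)
print(sorted(safe))  # → ['video_id', 'viewer_id', 'watch_seconds']
```

A keyed HMAC is used rather than a plain hash because common identifiers such as e-mail addresses can be guessed and re-hashed by anyone who does not need the key.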

Bias and Discrimination

AI is often seen as a neutral, objective tool, but AI systems can perpetuate bias if they are not carefully designed. AI models learn from the data they are trained on, and if that data reflects societal biases—whether racial, gender-based, or otherwise—the AI can unintentionally replicate those biases in its decision-making.

For instance, AI systems that recommend videos based on past behavior might inadvertently prioritize content from certain demographic groups, excluding others. In the context of enterprise video content management, this could manifest in training videos that disproportionately represent one gender or ethnicity while ignoring the needs of others.

To ensure that AI tools do not perpetuate bias, businesses must take steps to:

- Use diverse datasets to train AI models.

- Conduct regular bias audits to detect and address any bias in the system.

- Implement human oversight to validate AI-driven decisions, especially regarding content curation or moderation.
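A bias audit for a recommender can begin with a simple exposure check: compare each group's share of recommendations with its share of the video library. The sketch below assumes each video carries a hypothetical group label (how such labels are assigned, and whether they should be, is itself a governance decision) and flags groups whose exposure ratio falls below 0.8, a threshold borrowed from the "four-fifths" rule of thumb in US employee-selection guidance. It is a starting signal for human review, not a complete fairness assessment.

```python
from collections import Counter

def exposure_ratios(library: dict[str, str], recommended: list[str]) -> dict[str, float]:
    """Ratio of each group's share of recommendations to its share of the library.

    library maps video_id -> group label; recommended lists recommended video_ids
    (repeats allowed, e.g. pooled across many users)."""
    library_counts = Counter(library.values())
    rec_counts = Counter(library[v] for v in recommended)
    total_lib = sum(library_counts.values())
    total_rec = len(recommended)
    ratios = {}
    for group, n in library_counts.items():
        library_share = n / total_lib
        rec_share = rec_counts.get(group, 0) / total_rec if total_rec else 0.0
        ratios[group] = rec_share / library_share
    return ratios

def flag_underexposed(ratios: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Groups whose exposure ratio falls below the threshold, sorted for stable output."""
    return sorted(g for g, r in ratios.items() if r < threshold)

library = {"v1": "A", "v2": "A", "v3": "B", "v4": "B"}
recommended = ["v1", "v2", "v1", "v3", "v1", "v2", "v1", "v2", "v1", "v4"]
ratios = exposure_ratios(library, recommended)
print(flag_underexposed(ratios))  # → ['B']
```

Here group B makes up half of the library but receives only 20% of recommendations, giving a ratio of 0.4. Running such a check on a schedule turns "regular bias audits" into something measurable.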

Content Ownership and Intellectual Property

As AI becomes more involved in video editing and content creation, the issue of content ownership becomes increasingly complex. In traditional video content management, ownership is relatively straightforward—the creator or organization that produces the content owns the rights. However, with AI tools that generate or edit video content autonomously, who owns the resulting material?

This question has significant legal and ethical implications. For example, if an AI system is used to edit or remix a video, should the company that owns the AI or the original creator of the content retain ownership? The line becomes blurred, especially when AI makes creative decisions.

Enterprises need to develop clear policies around intellectual property in the context of AI, ensuring that the ownership of content created or edited by AI is clearly defined and legally enforceable.

Transparency and Accountability

One of AI's most significant ethical challenges is its lack of transparency. AI systems, particularly those that rely on deep learning algorithms, often act as “black boxes,” meaning the rationale behind their decisions is unclear. This lack of explainability creates problems, particularly when AI systems are used to moderate content or to decide which videos are recommended or removed.

To address this, businesses must prioritize explainable AI—the practice of developing AI systems that can provide understandable and traceable reasons for their decisions. This ensures that businesses can remain accountable for their AI systems' actions and make corrections when necessary.
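For simple scoring models, explainability can be as direct as reporting each feature's contribution to the score. The sketch below assumes a hypothetical linear relevance model with hand-set weights and illustrative features (`topic_match`, `recency`, `team_popularity`). It returns the score together with the contributions, largest first, so a reviewer can see why a video was recommended. Deep models need dedicated attribution techniques such as SHAP or integrated gradients, which this sketch does not attempt.

```python
from dataclasses import dataclass

# Hypothetical weights for an illustrative linear relevance model.
WEIGHTS = {"topic_match": 2.0, "recency": 0.5, "team_popularity": 1.0}

@dataclass
class Explanation:
    video_id: str
    score: float
    contributions: list[tuple[str, float]]  # (feature, weight * value), largest magnitude first

def explain_recommendation(video_id: str, features: dict[str, float]) -> Explanation:
    """Score a video and record how much each feature contributed to that score."""
    contributions = [(name, WEIGHTS[name] * features.get(name, 0.0)) for name in WEIGHTS]
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    score = sum(value for _, value in contributions)
    return Explanation(video_id, score, contributions)

exp = explain_recommendation("vid-042", {"topic_match": 0.9, "recency": 0.2, "team_popularity": 0.4})
for feature, value in exp.contributions:
    print(f"{feature}: {value:+.2f}")
print(f"total score: {exp.score:.2f}")
```

Storing the `Explanation` alongside each recommendation gives auditors a traceable reason for every decision, which is the property the paragraph above calls for.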

Employee Surveillance and Autonomy

AI’s use in employee surveillance raises significant ethical concerns. Many companies employ AI-driven video surveillance systems to monitor employee productivity, track movements, or ensure security. However, this can infringe upon employee privacy and autonomy.

Employees may feel uncomfortable or exploited if they know AI is constantly monitoring their actions. Businesses must find a balance between security and employee privacy. Transparent communication about surveillance policies, obtaining proper consent, and ensuring that AI tools are used ethically are critical steps in maintaining employee trust.

Navigating the Ethical Landscape

As AI continues to revolutionize Video Content Management, businesses face the challenge of balancing technological innovation with ethical responsibility. Navigating this landscape requires a proactive approach that incorporates Ethical AI Practices, transparent decision-making, and robust data protection measures. Here’s how enterprises can ethically and effectively manage AI integration in video content management.

Building Ethical AI Frameworks

To address these ethical challenges, businesses need to establish ethical AI frameworks that guide the responsible use of AI. These frameworks should include:

- Clear guidelines on how AI can be used ethically, including data privacy protocols and content moderation standards.

- Bias mitigation strategies, such as using diverse datasets and conducting regular bias audits.

- Human oversight mechanisms to ensure that AI decisions are fair and transparent.

- Employee engagement, including involving staff in conversations about the ethical use of AI.

Data Protection Measures

AI systems often require access to vast amounts of data, which increases the risk of data breaches and unauthorized access. To protect sensitive video content, businesses should:

- Encrypt video data to ensure it is secure during transmission and storage.

- Anonymize personal data where possible to protect individuals' identities.

- Develop clear data retention policies that limit how long video content is stored and how it is used.

Compliance with regulations such as GDPR or CCPA is essential to ensuring that businesses meet their legal obligations and protect user privacy.
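A retention policy is easiest to enforce when it is expressed as data that a scheduled job can check. The sketch below assumes hypothetical content categories and retention periods (illustrative values, not legal guidance) plus a hypothetical `legal_hold` flag, and lists the videos whose retention window has expired so they can be reviewed and deleted.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Illustrative retention periods per content category; real values come from legal/compliance review.
RETENTION = {
    "meeting_recording": timedelta(days=90),
    "training": timedelta(days=3 * 365),
    "security_footage": timedelta(days=30),
}

@dataclass
class Video:
    video_id: str
    category: str
    created: date
    legal_hold: bool = False  # hypothetical flag: videos under legal hold must not be deleted

def expired_videos(videos: list[Video], today: date) -> list[str]:
    """Return IDs of videos past their retention period and not under legal hold."""
    expired = []
    for v in videos:
        period = RETENTION.get(v.category)
        if period is None:
            continue  # unknown category: leave for manual classification rather than delete
        if not v.legal_hold and today - v.created > period:
            expired.append(v.video_id)
    return expired

videos = [
    Video("m1", "meeting_recording", date(2024, 10, 1)),
    Video("s1", "security_footage", date(2025, 2, 1)),
    Video("s2", "security_footage", date(2024, 12, 1), legal_hold=True),
    Video("t1", "training", date(2023, 1, 15)),
]
print(expired_videos(videos, today=date(2025, 2, 26)))  # → ['m1']
```

Keeping the periods in one table makes the policy reviewable by compliance staff without reading the job's logic.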

Ensuring Bias-Free AI

To prevent bias in AI systems, businesses should:

- Use diverse and representative data to train AI models, ensuring that all groups are fairly represented.

- Regularly audit AI systems for bias and adjust models as needed.

- Implement human checks to validate AI recommendations and ensure they do not inadvertently discriminate against certain groups.

Transparency and Explainability in AI

Ensuring transparency and accountability in AI systems requires:

- Designing AI models that can provide explainable and traceable reasons for their decisions.

- Ensuring that AI decisions are documented and accessible, allowing businesses to review and justify their actions when necessary.

- Encouraging human oversight to validate AI decisions, especially regarding content moderation or recommendations.
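Documenting AI decisions and routing uncertain ones to people can happen in the same step. The sketch below assumes a hypothetical moderation model that returns a label and a confidence score. Decisions below a confidence threshold (0.9 here, an arbitrary illustrative value) are queued for human review instead of being applied automatically, and every decision is appended to an audit log as a JSON line with the model version and a UTC timestamp.

```python
import json
from datetime import datetime, timezone

REVIEW_THRESHOLD = 0.9  # illustrative; tune per policy and per label

def record_moderation_decision(video_id: str, label: str, confidence: float,
                               model_version: str, log: list[str]) -> str:
    """Decide whether a model verdict is applied automatically or sent to a human,
    and append an audit record describing the decision to log."""
    action = "auto_apply" if confidence >= REVIEW_THRESHOLD else "human_review"
    entry = {
        "video_id": video_id,
        "model_label": label,
        "confidence": confidence,
        "model_version": model_version,
        "action": action,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    log.append(json.dumps(entry))
    return action

audit_log: list[str] = []
print(record_moderation_decision("vid-7", "policy_violation", 0.97, "mod-v3", audit_log))  # → auto_apply
print(record_moderation_decision("vid-8", "policy_violation", 0.62, "mod-v3", audit_log))  # → human_review
```

In practice the log would be written to append-only storage rather than an in-memory list, so that past decisions cannot be silently altered.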

Employee Rights and Surveillance Best Practices

When implementing AI surveillance, companies should:

- Be transparent about their surveillance practices and obtain explicit consent from employees.

- Use surveillance tools ethically, ensuring they are necessary for security or productivity and not an invasion of privacy.

- Respect employee autonomy, ensuring that surveillance does not create a culture of distrust.
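Consent is easier to honor when it is checked in code before any AI analysis runs. The sketch below assumes a hypothetical in-memory consent registry that records which people have explicitly opted in to a given processing purpose (for example, `face_recognition`). A video is processed for that purpose only if every identified participant has consented; otherwise it is skipped and the missing consents are reported.

```python
from dataclasses import dataclass, field

@dataclass
class ConsentRegistry:
    # person_id -> set of processing purposes that person has explicitly opted in to
    grants: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, person_id: str, purpose: str) -> None:
        self.grants.setdefault(person_id, set()).add(purpose)

    def revoke(self, person_id: str, purpose: str) -> None:
        self.grants.get(person_id, set()).discard(purpose)

    def has_consent(self, person_id: str, purpose: str) -> bool:
        return purpose in self.grants.get(person_id, set())

def missing_consents(registry: ConsentRegistry, participants: list[str], purpose: str) -> list[str]:
    """Participants who have not opted in; processing should proceed only if this is empty."""
    return [p for p in participants if not registry.has_consent(p, purpose)]

registry = ConsentRegistry()
registry.grant("emp-101", "face_recognition")
registry.grant("emp-102", "face_recognition")
registry.revoke("emp-102", "face_recognition")  # consent can be withdrawn at any time

missing = missing_consents(registry, ["emp-101", "emp-102"], "face_recognition")
print("process" if not missing else f"skip; missing consent from {missing}")
```

Defaulting to "no consent" for anyone not in the registry means new or unknown participants are protected until they explicitly opt in.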

Final Thoughts on Ethical AI in Video Content Management

The integration of AI in Video Content Management is revolutionizing how enterprises store, organize, and analyze video data. From automated tagging to personalized content recommendations, AI offers unprecedented efficiency and scalability. However, with these advancements come ethical challenges, including privacy concerns, algorithmic bias, and questions of transparency and accountability.

To navigate this complex landscape, businesses must prioritize Ethical AI Practices that balance innovation with responsibility. This involves implementing transparent decision-making processes, conducting regular bias audits, ensuring data privacy compliance, and maintaining human oversight in critical AI-driven decisions.

As AI continues to shape the future of video content management, adopting Ethical AI is no longer optional—it’s a strategic imperative. By embedding ethical principles into AI frameworks, enterprises can harness the power of AI while safeguarding user privacy, promoting fairness, and maintaining accountability.

Ultimately, responsible Video Content Management powered by Ethical AI ensures that organizations not only maximize productivity and operational efficiency but also foster trust, inclusivity, and long-term sustainability.

Embrace the future of video content management with ethical AI practices. Contact us to learn how VIDIZMO can help you implement secure and ethical AI-powered video solutions, or start your free trial today to experience the power of responsible AI in video content management.

Stay ahead of the curve with transparent, accountable, and ethical AI practices.

People Also Ask

What are the ethical challenges of using AI in Video Content Management?

AI in Video Content Management raises ethical challenges such as data privacy concerns, algorithmic bias, transparency issues, and content ownership disputes. These challenges necessitate robust Ethical AI Practices to ensure responsible and fair usage.

How can businesses implement Ethical AI in Video Content Management?

Businesses can implement Ethical AI by using diverse datasets, conducting regular bias audits, ensuring data privacy compliance, and maintaining human oversight in AI decision-making processes.

Why is transparency important in AI-driven Video Content Management?

Transparency ensures accountability and trust. It allows users to understand how AI algorithms make decisions, which makes bias easier to detect and correct and supports ethical content recommendations.

What is the impact of AI bias in Video Content Management?

AI bias can lead to unfair content recommendations, lack of diversity, and exclusion of certain user groups, affecting the credibility and effectiveness of video content management systems.

How can companies protect data privacy in AI-powered Video Content Management?

Companies can protect data privacy by implementing encryption, anonymizing personal data, complying with regulations like GDPR and CCPA, and using secure data storage solutions.

Who owns AI-generated video content in enterprises?

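Ownership of AI-generated content is not settled. It is usually determined by contracts, such as the AI vendor's terms of service and employment or licensing agreements, and intellectual property rules vary by jurisdiction; in the United States, for example, copyright protection requires meaningful human authorship.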

What are Ethical AI Practices in Video Content Management?

Ethical AI Practices include transparent decision-making, proactive bias mitigation, data privacy protection, and ensuring accountability and fairness in AI-driven video content management.

How can AI be used responsibly in Video Content Management?

Responsible AI use involves transparent algorithms, diverse training datasets, regular audits for bias, and human oversight to ensure ethical content moderation and recommendation.

What is the role of bias audits in Ethical AI?

Bias audits help identify and mitigate biases in AI algorithms, ensuring fair and inclusive video content recommendations and enhancing the credibility of AI systems.

Why is Ethical AI important for Video Content Management?

Ethical AI is crucial for maintaining user trust, ensuring fair content recommendations, protecting data privacy, and complying with regulatory standards in video content management.
